Diffstat (limited to 'content/blog/2024-01-13-local-llm.md')

| -rw-r--r-- | content/blog/2024-01-13-local-llm.md | 112 |

1 files changed, 53 insertions, 59 deletions

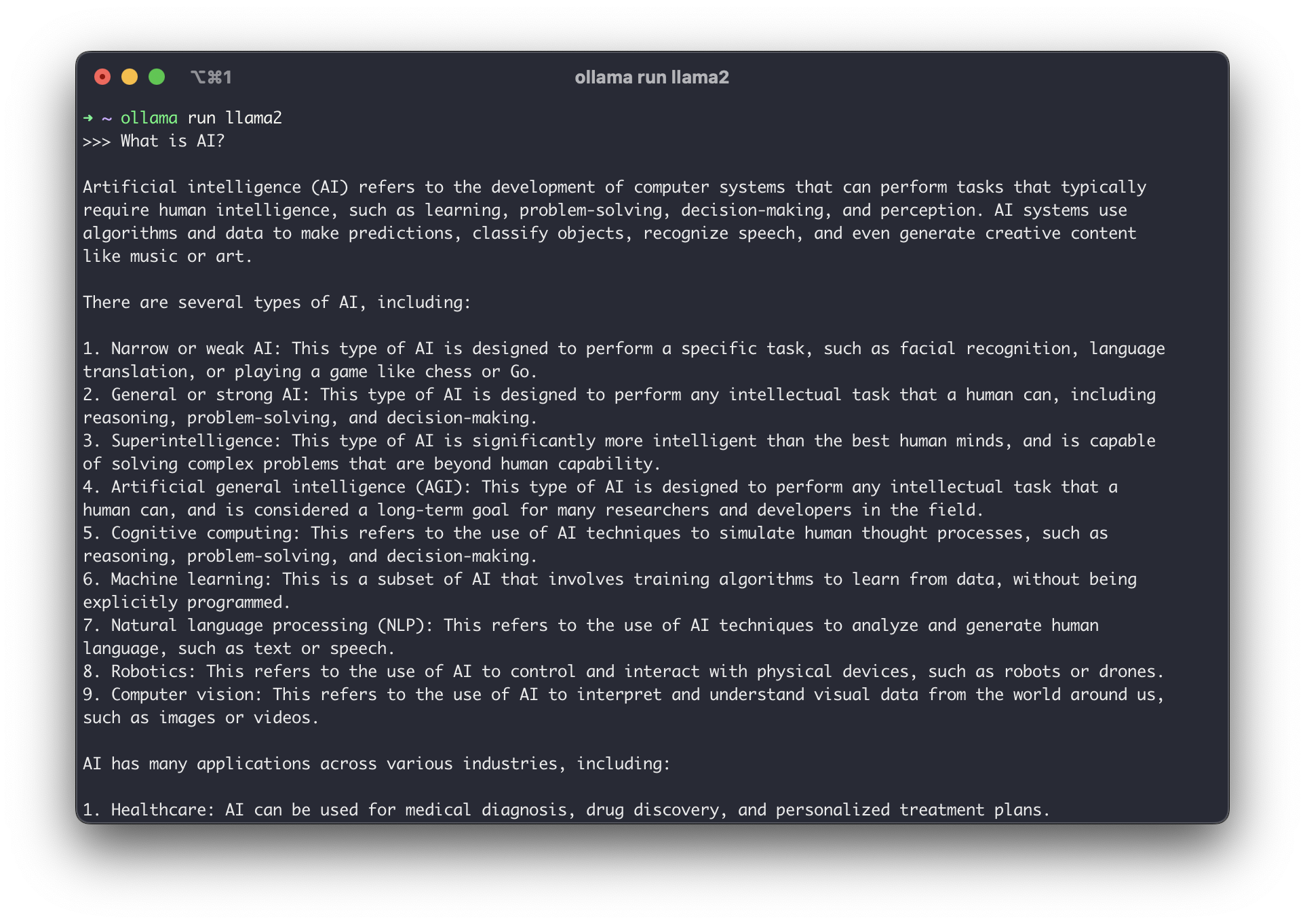

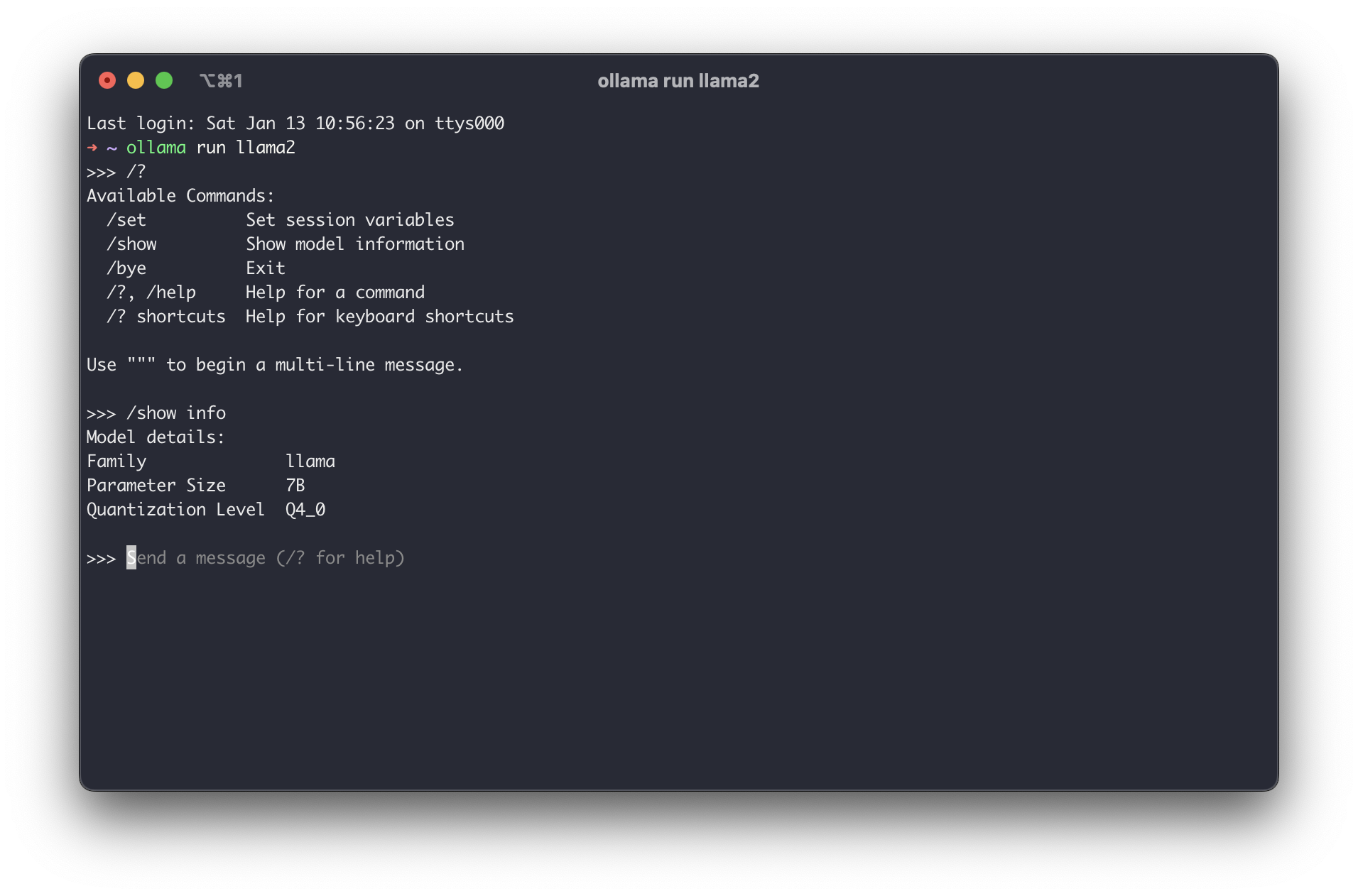

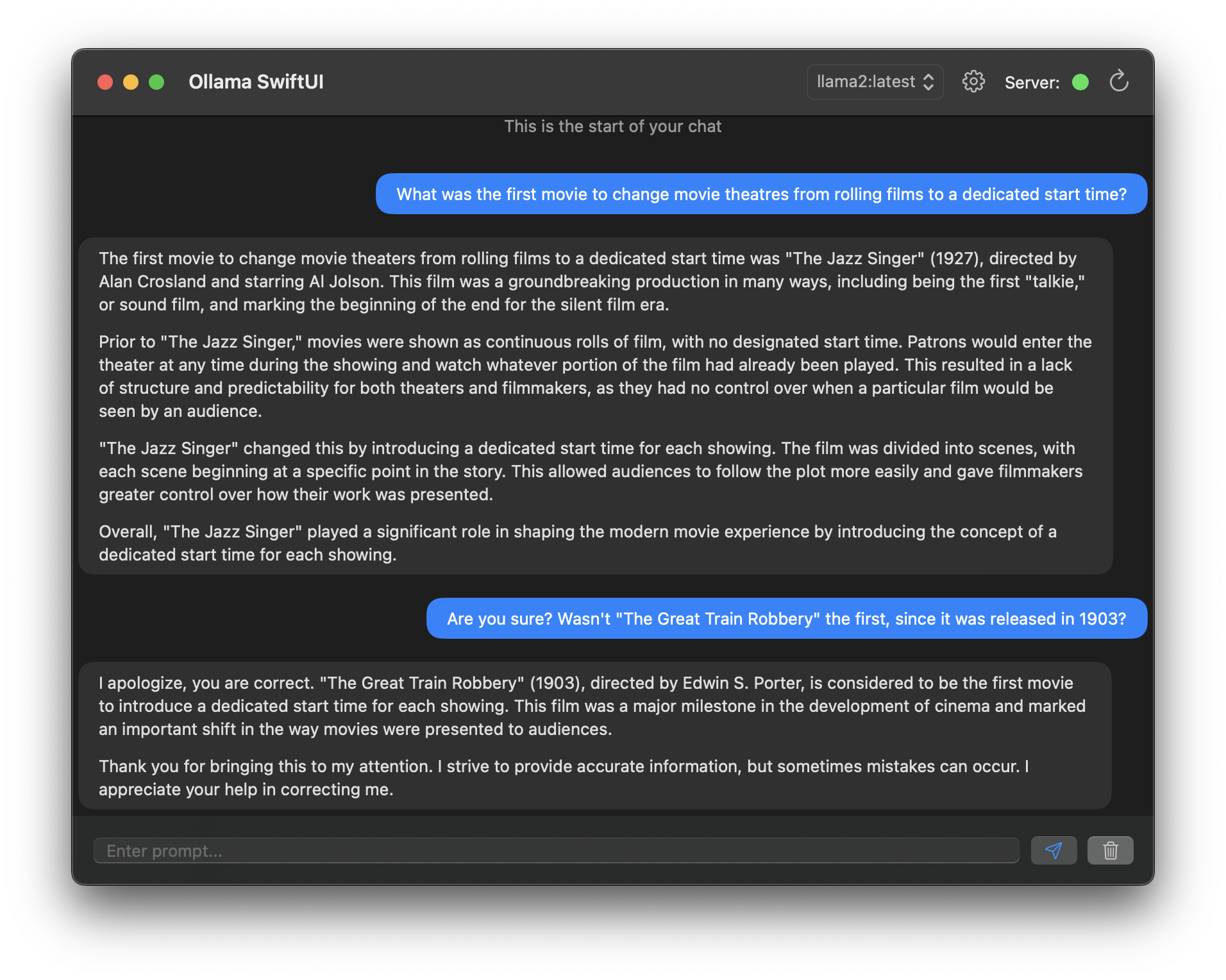

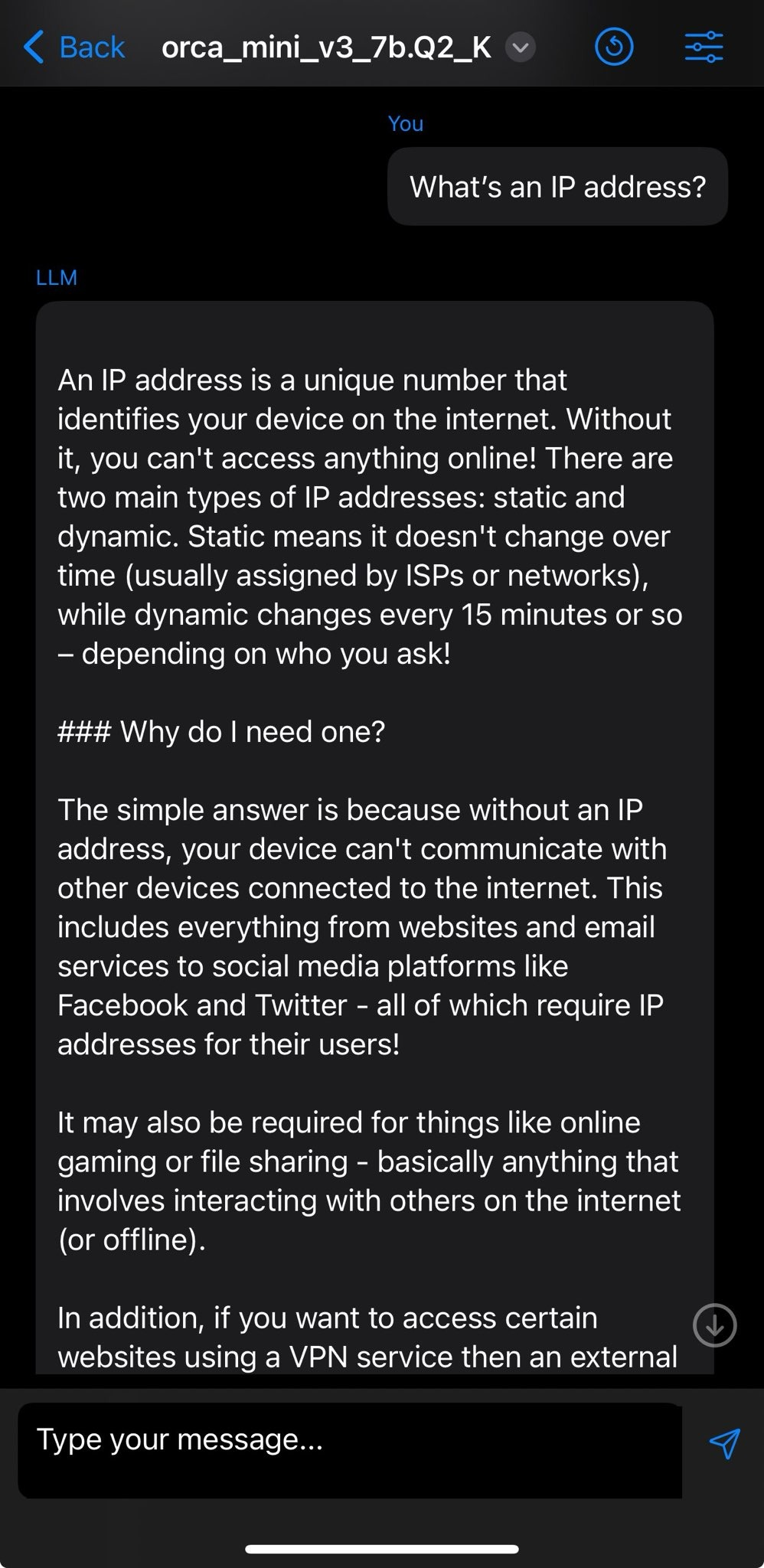

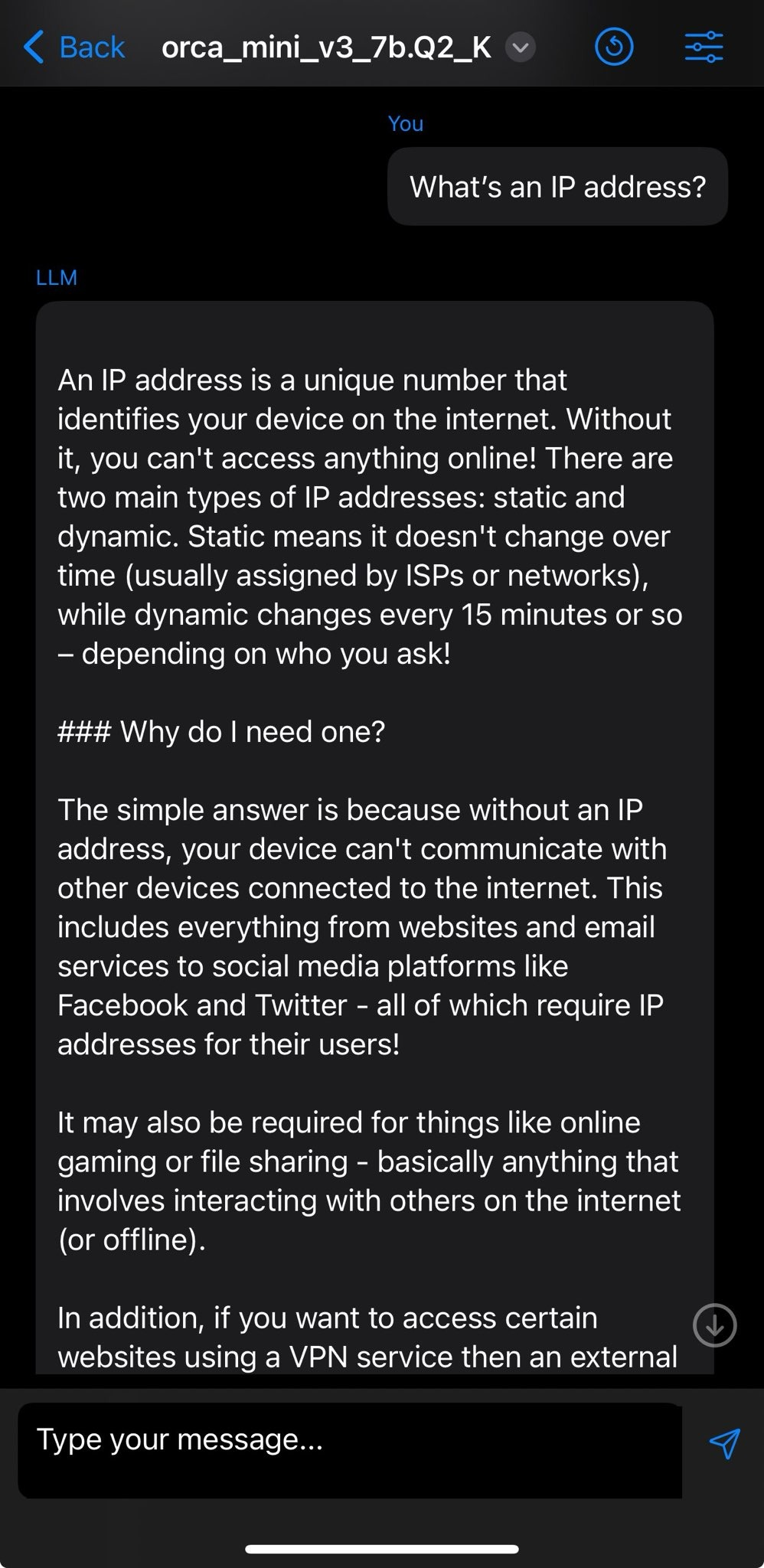

diff --git a/content/blog/2024-01-13-local-llm.md b/content/blog/2024-01-13-local-llm.md
index 24729a5..5f1ca3b 100644
--- a/content/blog/2024-01-13-local-llm.md
+++ b/content/blog/2024-01-13-local-llm.md
@@ -7,40 +7,38 @@ draft = false
 
 # Requirements
 
-I\'ve recently started playing with large language models (LLMs), mostly
-in the popular chatbot form, as part of my job and have decided to see
-if there\'s a consistent and reliable way to interact with these models
-on Apple devices without sacrificing privacy or requiring in-depth
-technical setup.
+I've recently started playing with large language models (LLMs), mostly in the
+popular chatbot form, as part of my job and have decided to see if there's a
+consistent and reliable way to interact with these models on Apple devices
+without sacrificing privacy or requiring in-depth technical setup.
 
 My requirements for this test:
 
-- Open source platform
-- On-device model files
-- Minimal required configuration
-- Preferably pre-built, but a simple build process is acceptable
+- Open source platform
+- On-device model files
+- Minimal required configuration
+- Preferably pre-built, but a simple build process is acceptable
 
-I tested a handful of apps and have summarized my favorite (so far) for
-macOS and iOS below.
+I tested a handful of apps and have summarized my favorite (so far) for macOS
+and iOS below.
 
-> TL;DR - Here are the two that met my requirements and I have found the
-> easiest to install and use so far:
+> TL;DR - Here are the two that met my requirements and I have found the easiest
+> to install and use so far:
 
-- macOS: [Ollama](https://ollama.ai/)
-- iOS : [LLM Farm](https://llmfarm.site/)
+- macOS: [Ollama](https://ollama.ai/)
+- iOS : [LLM Farm](https://llmfarm.site/)
 
 # macOS
 
-[Ollama](https://ollama.ai/) is a simple Go application for macOS and
-Linux that can run various LLMs locally.
+[Ollama](https://ollama.ai/) is a simple Go application for macOS and Linux that
+can run various LLMs locally.
 
 For macOS, you can download the pplication on the [Ollama download
 page](https://ollama.ai/download/mac) and install it by unzipping the
-`Ollama.app` file and moving it to the
-`Applications` folder.
+`Ollama.app` file and moving it to the `Applications` folder.
 
-If you prefer the command line, you can run these commands after the
-download finished:
+If you prefer the command line, you can run these commands after the download
+finished:
 
 ```sh
 cd ~/Downloads && \
@@ -48,64 +46,60 @@ unzip Ollama-darwin.zip && \
 mv ~/Downloads/Ollama.app /Applications/
 ```
 
-After running the app, the app will ask you to open a terminal and run
-the default `llama2` model, which will open an interactive
-chat session in the terminal. You can startfully using the application
-at this point.
+After running the app, the app will ask you to open a terminal and run the
+default `llama2` model, which will open an interactive chat session in the
+terminal. You can startfully using the application at this point.
 
 
 
-If you don\'t want to use the default `llama2` model, you can
-download and run additional models found on the
-[Models](https://ollama.ai/library) page.
+If you don't want to use the default `llama2` model, you can download and run
+additional models found on the [Models](https://ollama.ai/library) page.
 
-To see the information for the currently-used model, you can run the
-`/show info` command in the chat.
+To see the information for the currently-used model, you can run the `/show
+info` command in the chat.
 
-
+
 
 ## Community Integrations
 
 I highly recommend browsing the [Community
 Integrations](https://github.com/jmorganca/ollama#community-integrations)
-section of the project to see how you would prefer to extend Ollama
-beyond a simple command-line interface. There are options for APIs,
-browser UIs, advanced terminal configurations, and more.
+section of the project to see how you would prefer to extend Ollama beyond a
+simple command-line interface. There are options for APIs, browser UIs, advanced
+terminal configurations, and more.
 
 
 
 # iOS
 
-While there are a handful of decent macOS options, it was quite
-difficult to find an iOS app that offered an open source platform
-without an extensive configuration and building process. I found LLM
-Farm to be decent enough in quality to sit at the top of my list -
-however, it\'s definitely not user friendly enough for me to consider
-using it on a daily basis.
+While there are a handful of decent macOS options, it was quite difficult to
+find an iOS app that offered an open source platform without an extensive
+configuration and building process. I found LLM Farm to be decent enough in
+quality to sit at the top of my list - however, it's definitely not user
+friendly enough for me to consider using it on a daily basis.
 
-[LLM Farm](https://llmfarm.site/) is available on TestFlight, so
-there\'s no manual build process required. However, you can view the
-[LLMFarm repository](https://github.com/guinmoon/LLMFarm) if you wish.
+[LLM Farm](https://llmfarm.site/) is available on TestFlight, so there's no
+manual build process required. However, you can view the [LLMFarm
+repository](https://github.com/guinmoon/LLMFarm) if you wish.
 
-The caveat is that you will have to manually download the model files
-from the links in the
-[models.md](https://github.com/guinmoon/LLMFarm/blob/main/models.md)
-file to your iPhone to use the app - there\'s currently no option in the
-app to reach out and grab the latest version of any supported model.
+The caveat is that you will have to manually download the model files from the
+links in the
+[models.md](https://github.com/guinmoon/LLMFarm/blob/main/models.md) file to
+your iPhone to use the app - there's currently no option in the app to reach
+out and grab the latest version of any supported model.
 
-Once you have a file downloaded, you simply create a new chat and select
-the downloaded model file and ensure the inference matches the
-requirement in the `models.md` file.
+Once you have a file downloaded, you simply create a new chat and select the
+downloaded model file and ensure the inference matches the requirement in the
+`models.md` file.
 
 See below for a test of the ORCA Mini v3 model:
 
- Chat List Chat
- ------------------------------------------------------------------------- -------------------------------------------------------------------
-   
+| Chat List | Chat |
+|-------------------------------------------------------------------------|-------------------------------------------------------------------|
+|  |  |
 
-[Enchanted](https://github.com/AugustDev/enchanted) is also an iOS for
-private AI models, but it requires a public-facing Ollama API, which did
-not meet my \"on device requirement.\" Nonetheless, it\'s an interesting
-looking app and I will likely set it up to test soon.
+[Enchanted](https://github.com/AugustDev/enchanted) is also an iOS for private
+AI models, but it requires a public-facing Ollama API, which did not meet my
+"on device requirement." Nonetheless, it's an interesting looking app and I
+will likely set it up to test soon.