
| author | Christian Cleberg <hello@cleberg.net> | 2024-04-27 17:01:13 -0500 |
|---|---|---|
| committer | Christian Cleberg <hello@cleberg.net> | 2024-04-27 17:01:13 -0500 |
| commit | 74992aaa27eb384128924c4a3b93052961a3eaab (patch) | |
| tree | d5193997d72a52f7a6d6338ea5da8a6c80b4eddc | |
| parent | 3def68d80edf87e28473609c31970507d9f03467 (diff) | |
| download | cleberg.net-74992aaa27eb384128924c4a3b93052961a3eaab.tar.gz cleberg.net-74992aaa27eb384128924c4a3b93052961a3eaab.tar.bz2 cleberg.net-74992aaa27eb384128924c4a3b93052961a3eaab.zip | |

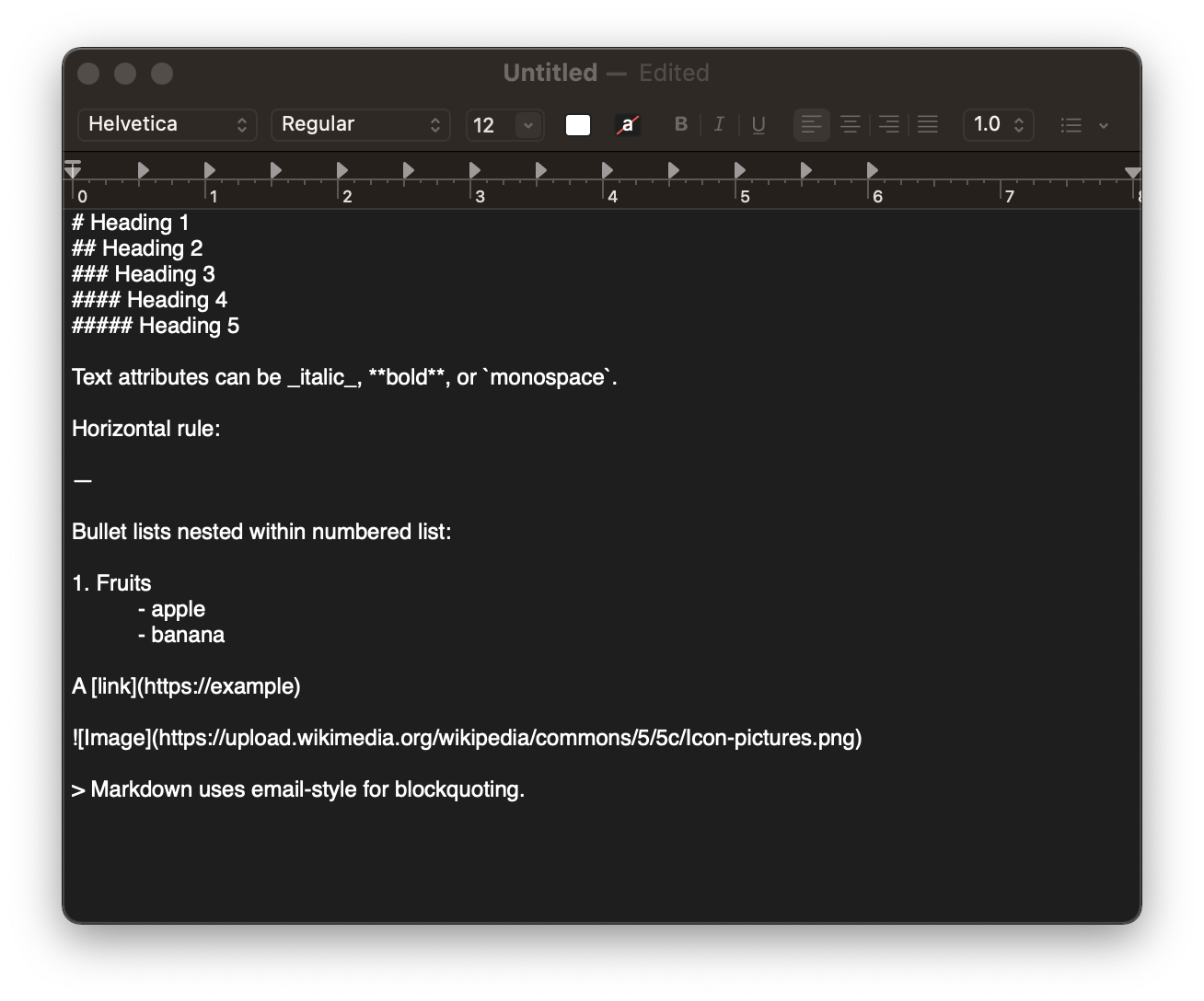

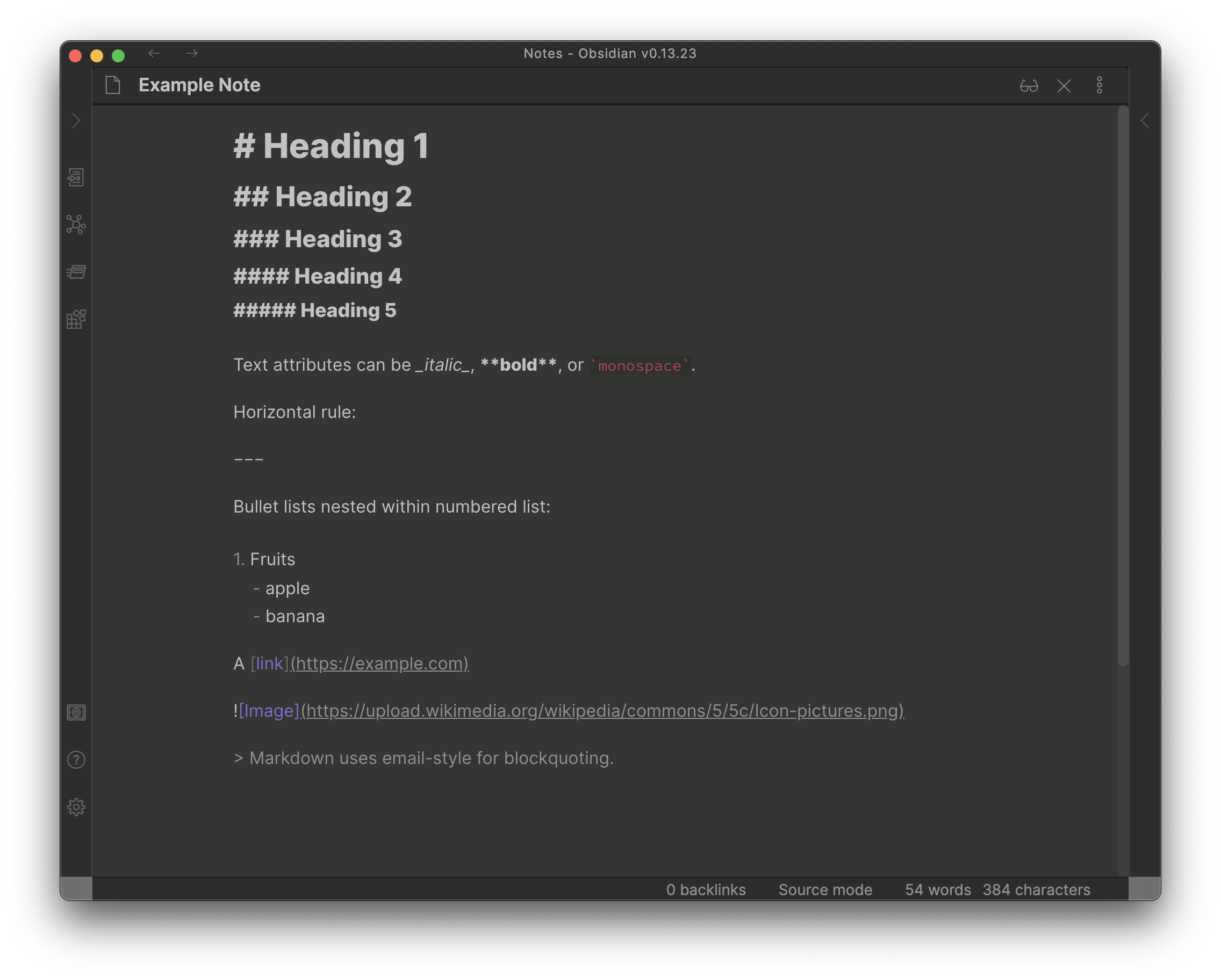

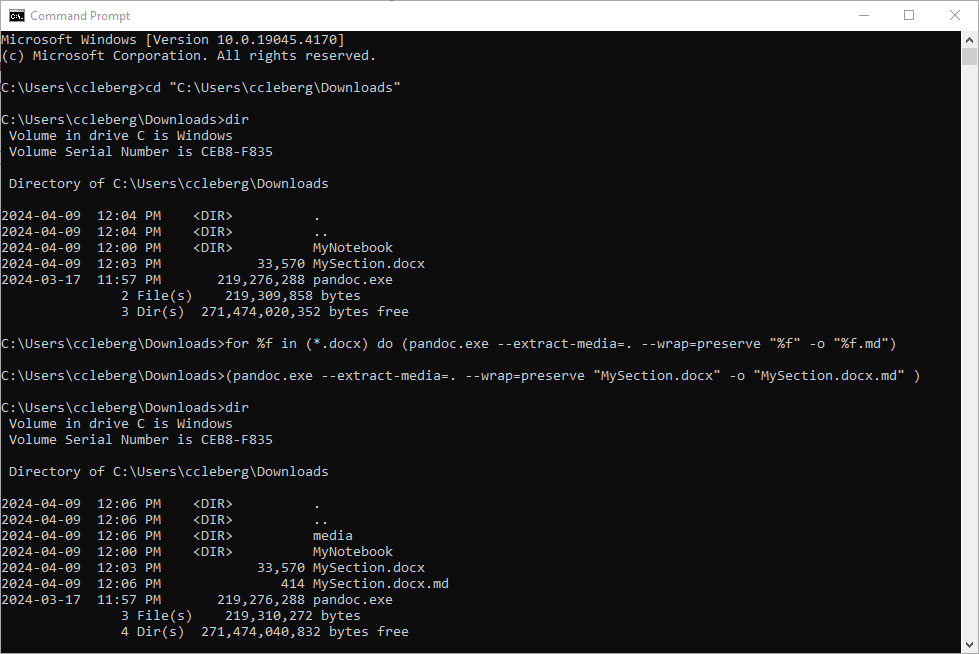

test conversion back to markdown

265 files changed, 16601 insertions, 14157 deletions

    @@ -1,3 +1,3 @@
     .DS_Store
    -.build
     .vscode
    +public